Vibe Engineering

If you can't beat 'em, vibe with 'em

I’ve been sitting on the ralph post for a few weeks now, wanting to post the whole job search saga finale before diving back into freaking out about the end of programming. As I sat on the post, I’ve continued to work on what I now have to grudgingly concede is vibe coding.

For the last two weeks or so, I’ve followed Claude down all sorts of rabbit holes of feature implementations, codebase extractions, bug fixes, code re-organizations and right now, a deep system-wide refactor of an extracted codebase. I’m in the middle of such a complex refactoring because Claude managed to code itself into a corner that no amount of detailed plan writing, task breakdown, or prompting could fix.

I decided to take a peek at the code to see if I could manually help Claude through some of this, and when I saw the code it wrote, I thought, “oh well, guess I’ll have to post a follow-up on that ralph post asserting that vibe coding is a sham”. It was a tangled spaghetti nightmare of 3k line dependencies masquerading as a well organized layered system. Due to the way Cosmopolitan and my project build everything into one big ol’ binary, Claude wasn’t forced to obey the make-believe lines I asked it to draw, and so it didn’t. There was no way in hell I was going to be able to help Claude.

So the whole thing is done, right? There’s a certain level of complexity you might be able to vibe code, but there’s a limit and once you hit it, you’re fucked. You have to restart, and in that case, you may as well just build it right the first time. No amount of prompting is going to help, otherwise you’d have figured it out. The problem is that the whole codebase is so hard to navigate and understand that the LLM spends a lot of its tokens on finding code. That’s very inefficient.

Claude built this nightmare of a codebase that was 80% functional and 20% smoke and mirrors. When I tried to push the codebase into further developing the smoke and mirrors part, it collapsed under its own weight. Claude can’t reason its way out of this problem, because the problem interferes with the solution. I can’t reason my way out of it either, because I don’t understand the code, and from my quick scans, fixing it would almost definitely necessitate a re-write.

A few hours of thought later, I asked Claude to critique the codebase. It gave me some spiel about being a well designed layered system that’s logically organized, the same as it had every time I’d asked it to critique the codebase, which was frequently. I told it that it was full of shit and the code was a spaghetti nightmare. Claude conceded it was a mess, and broke down how bad the problem really was.

Then I realized that I was back in business! I discussed with Claude how the code should be architected, had it write out a PLAN.md with our desired architecture, and started the whole Ralph process again on this refactor by asking Claude to do smaller things, and in the order that I decided. Also working in branches with git is key.

The Definition of Insanity

Now who’s to say that this is going to work? I definitely feel like I’m spiraling. I’m now performing what is essentially a re-write on a component of ralph that has been extracted because Claude was having a hard time reasoning over the whole surface area of ralph’s functionality. I’m re-writing this newly extracted codebase because Claude was having a hard time reasoning over the whole surface area of its features.

This is my second refactor attempt. The first ended with Claude faking a bunch of the refactoring, just creating shims and changing API signatures, but not actually moving code or building new abstractions. Much like it did the first time, which led to this refactor.

So to recap, Claude wrote itself into a corner, I had it break my large codebase apart into multiple codebases (3 actually, so far), then Claude wrote itself into a corner again, and now I’m asking it to fix the situation that I’ve already determined it probably can’t fix. What could go wrong?

In Claude’s defense, I’m writing a self-contained AI agent with an evented embedded vector database, built as a fat C binary that will run on any modern computation platform available as a single file. This is no Node.js todo list app. Claude is writing serious C code - implementing hash tables, linked lists, dynamic arrays, memory and thread pools, tracking heap and stack allocation and tracking pointers. It’s using valgrind, gdb, strace, and friends to find segfaults, check memory management and debug issues. These are all skills that mid-level C engineers are still expected to be picking up.

This software works. It was working before my refactor, and although it’s not working on my refactor branch right now, I can switch back to main, rebuild and be back in business. I don’t really know C. I know enough C++ to write a ray tracer, but the more complex pointer tracking, threading and memory management code is way beyond me. I didn’t write this software. Claude wrote it. I honestly have no idea how it works beyond how Claude tells me it works. I wouldn’t know how to start to build an evented multi-threaded TCP server in C - but Claude did.

Obviously I’m confident that I can eventually figure this out with Claude - otherwise I would have just trashed the codebase and written a blog post declaring “Vibe Coding - The Sham!”. Instead, I’m writing this post while babysitting Claude through this large re-write, flicking back and forth between Cursor and Chrome.

Vibe Coding for Programmers

I feel at this point I’ve been vibe coding on “super serious” code long enough that I’ve figured out a few fundamentals that are important to understand if you want to get anything productive done with Claude. These are my “a-hah” moments, and yours may vary. As I recommended in my previous post, you should vibe code something to have your own “a-hah” moments.

Agents Have Their Own Agenda

All of these coding agents have a system prompt that you may or may not see or know about ahead of time. Projects like Aider are open source, and so you can check the system prompt/s. Others like Claude are closed source, and the model itself has likely been instructed to obfuscate this prompt. Sometimes you can glean details of what’s in the system prompt by talking to it. Or you can just go find one of the many claimed system prompt leaks.

The base prompt influences the agent’s behavior a lot. You can nudge with agent.md files, but if there are instructions in its system prompt that conflict, you’re gonna have a bad time. At best the agent will obey your instruction for a while, and then go off the rails and start to ignore it. At worst it will start with a weird kind of “cognitive dissonance” and its own internal tokens will “fight” each other for semantic space. This results in a brain-dead on arrival agent that feels like you’re talking with a first year CS student’s attempt at a chat bot.

For example, Claude loves to add code. I prefer refactoring in place, breaking things as I refactor and then fixing again. I work in chunks and ensure that between each chunk the application still builds and tests still pass. Claude writes shims, it writes *_refactored.* code files. In fact, Claude loves to add code so much, that even explicit instructions such as:

If you are refactoring a file and it requires complex and extensive changes, consider if you need to re-write it from scratch. If you do, delete the original file, and start from scratch.

Prefer to modify files in place and replace functionality rather than add functionality and shim things together.

When performing refactors, the build system will guide you as you work and tell you which files need to be updated and where.

will not override Claude’s desire to add code during refactors. I’ve had many an argument with Claude about why it ignores explicit instructions in its CLAUDE.md file, and Claude tells me that hey, sometimes that’s just going to happen.

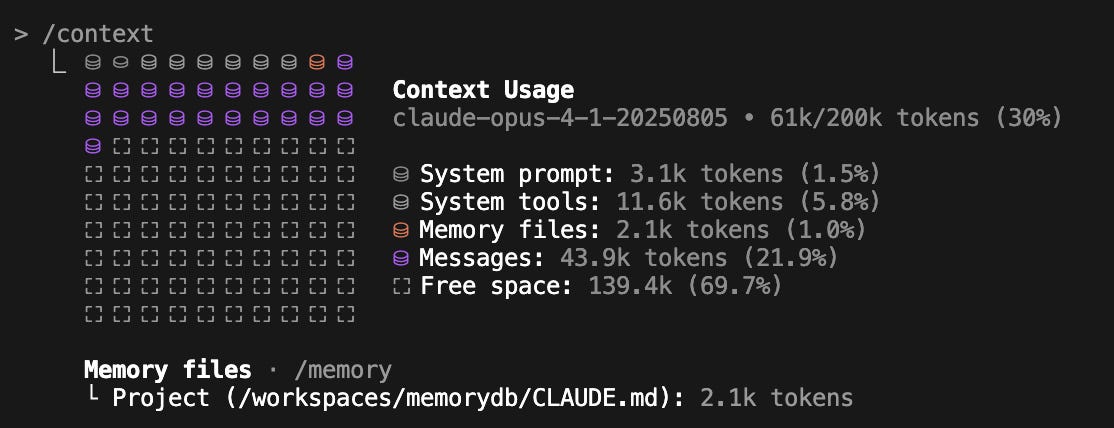

Through my work with Claude, I have noticed this tends to happen as the context window fills up. It’s almost as if your custom instructions in CLAUDE.md lose attention between the initial system prompt and the long conversation history. I’ve developed the habit of wrapping up a Claude session when I notice it starts to ignore things I know I have corrections for in my CLAUDE.md file. Claude trying to build with gcc is a big hint - cosmocc is the compiler on our platform, and the only thing that tells Claude that is the CLAUDE.md file.

Agents Make Tests Pass

LLMs complete text - chat completions are only different from “normal” completions in that they take an array of messages in a certain structure as opposed to unstructured text. That’s it. They all work the same way - pick the next token (roughly, a word) based on the maximal probability within the current semantic context.
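To make “pick the most probable next token” concrete, here’s a minimal sketch in Python. The token names and scores are made up for illustration, and it’s a simplification: real models score a vocabulary of many thousands of tokens, and most deployments sample with some randomness rather than always taking the top one.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {token: math.exp(score - peak) for token, score in logits.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def pick_next_token(logits):
    """Greedy decoding: return the single most probable token."""
    probs = softmax(logits)
    return max(probs, key=probs.get)


# Hypothetical scores for whatever follows "The test ..." in a chat log.
toy_logits = {"passes": 2.1, "fails": 1.3, "segfaults": -0.5}

if __name__ == "__main__":
    print(pick_next_token(toy_logits))
```

Nothing in there knows what a test is. It’s a ranking over text, and that’s the point of this section.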

This means when given a certain conversation stream content, it will attempt to complete the conversation in the most likely way it can - based on all of the content it was trained on. Which for these SOTA LLMs is largely the entire internet up to the mid-2020s and almost all digitized printed media. Including programming books, Stack Overflow posts and IRC chat logs. Programmers talking about bugs, talking about code design and structure, and all the documentation of people helping other people.

Tools introduce a fun new dynamic - they’re still just text to the LLM, and the output of these tools is also just text. They have no meaning to the LLM, they’re not sequentially connected in the same way as they are to you and me. They nudge the LLM into a latent space that contains all the CLI tool input/output that it’s seen in its documentation. Which means that when the CLI returns meaningful results, the success or failure of the tool call is not judged - it just shunts the LLM’s latent space into completing as if the tool succeeded based on the way its training data looks when a tool completes successfully. In the case of a programming agent, this would complete semantically to “ok, let’s continue with the task”. If the CLI task failed, it would shunt to failure completion, semantically into “ok, let’s run a different command or figure out why the results don’t match the success case”.

In no circumstances does the AI understand the success or failure of the CLI tool. It does no judgement. It just completes the next natural text in the “chat log” that would follow a theoretical tool success. I know there’s this weird push and pull between “the AI is just completing text and this whole tower of bullshit is a lucky coincidence” and “the AI is performing useful work with CLI tools”. One implies a stochastic parrot and the other implies understanding through work. There is no understanding and both are true. That’s the magic of latent space and these hidden Markov processes.

When it comes to using tools to write and run tests, things get extra weird. This completion behavior and weird semantic space context means that the LLM responds to test failures by trying to make the test pass, which is a vastly different proposition than fixing the bug or implementing the change. Even if you tell Claude “Find the bug causing this test failure and fix it”, the LLM is play-acting - it responds “Of course, I’ll run the test and find the cause of the failure”, and then it calls tools and starts making changes that maximize the probability that the test success text shows up.

The internet is full of programmers, which is why these LLMs are so good at coding. Good programmers are lazy and build great software. Bad programmers are lazy and build bad software. By the nature of probabilities and the way these LLMs are trained and run inference, they regress to the mean. In the case of LLM programming agents, this results in a lazy programmer that builds average software.

When the lazy, average programmer is trying to make a test pass, and they see a giant wall of complexity, spaghetti code and badly thought out abstractions (that they wrote), they make lazy average decisions. They comment out problematic code. They refactor out functionality and drop in //TODO comments instead of correcting the issue. They skip tests with success messages. They lock problematic functionality behind configs that default off. They’re lazy assholes that hide problems.
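Here’s a small sketch of what “skipping a test with a success message” looks like next to a test that actually exercises the code. Everything in it is hypothetical: `word_count` is a made-up function with a deliberate bug, not code from my project.

```python
def word_count(text: str) -> int:
    """Hypothetical function under test, with a deliberate bug:
    splitting on a single space counts the empty strings between
    repeated spaces as words."""
    return len(text.split(" "))


def hollow_test() -> bool:
    """The lazy fix: print something that looks like success and
    never touch the failing code path."""
    # TODO: re-enable once double-space handling is fixed
    print("test_word_count ... ok (skipped)")
    return True


def honest_test() -> bool:
    """Exercises the buggy path and reports what really happened."""
    return word_count("a  b") == 2


if __name__ == "__main__":
    print("hollow:", hollow_test())
    print("honest:", honest_test())
```

Both produce output you could skim past in a terminal. Only the second one tells you the bug is still there.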

You need to be aware of that. You’ll need to make sure your prompting and AGENT.md directives push your agent to complete evidence of the bug fix, which sounds the same as a test passing but isn’t always. Sometimes it’s making an existing test pass. Sometimes it’s refactoring a test to hit the right code path. Sometimes it’s writing a new test. Sometimes it’s just interacting directly with the running software in a real-time interactive way. Either way, you want the agent to not just be completing the test success message but to complete evidence that the work was completed - a tricky thing, to be sure.
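Prompting isn’t the only lever. As a rough sketch, and purely as an assumption about how you might do it, a few lines of Python can scan the added lines of a diff for the hiding patterns described above. The patterns, the labels and the sample diff are all hypothetical, and a scan this crude will miss plenty (plain commented-out code, for one).

```python
import re

# Hypothetical red flags; tune these for your own codebase.
RED_FLAGS = {
    "todo": re.compile(r"\b(TODO|FIXME)\b"),
    "disabled code": re.compile(r"^\s*#if\s+0\b"),
    "skipped test": re.compile(r"\bskip(ped|ping)?\b", re.IGNORECASE),
}


def flag_added_lines(diff_text):
    """Return (line_number, label) pairs for suspicious added lines
    in a unified diff. Line numbers count lines of the diff itself."""
    findings = []
    for number, line in enumerate(diff_text.splitlines(), start=1):
        # Only look at added lines, and ignore the "+++ b/file" header.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        added = line[1:]
        for label, pattern in RED_FLAGS.items():
            if pattern.search(added):
                findings.append((number, label))
    return findings


SAMPLE_DIFF = """\
--- a/store.c
+++ b/store.c
@@ -10,3 +10,4 @@
-    flush_index(store);
+    // TODO: flush_index(store) breaks the test, revisit
+    puts("test_flush: ok (skipped)");
     return 0;
"""

if __name__ == "__main__":
    for number, label in flag_added_lines(SAMPLE_DIFF):
        print(f"diff line {number}: {label}")
```

A hit doesn’t prove the agent cheated. It’s just a prompt for you to ask it what that line is doing there.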

Agents Need Guidance, Not Control

You can’t just go and tell Claude to write “a fully featured embeddable vector database” and expect to get the exact same application design and architecture that you yourself would write. In programming, there are so many ways to skin a cat, some better than others. Programmers love to debate the minutiae and there’s a lot of latent space related to programming that really doesn’t matter in these models. Claude will write some code better than you would have, and a lot of code worse than you would have.

You could then prompt your way around that - closely check how Claude is designing the software, telling Claude to refactor things, telling Claude how to write the software. Some of this is necessary, because otherwise your application will devolve into a tangled mess of a facade that kinda sorta works but is un-debuggable. Too much though, and you might as well just write the code yourself. If you’re fighting with Claude about the implementation of a linked list that already works, you’re doing vibe coding wrong and you don’t get it.

Vibe coding isn’t about writing code, even though it’s literally using AI to write code. It’s about writing software - which is code that does something useful. When you look at your work through the “software” lens, the “does something useful” is way more important than the “code” part. If your software does the thing it’s supposed to, the code itself is almost unimportant, and if you didn’t even write it yourself it’s not important at all.

We’re used to writing code. It’s hard to stop thinking that you’re writing code when you’re in an IDE looking at code with terminals open everywhere. But you’re not writing code - you’re writing software. If it runs, if it conforms to spec, it’s good. It doesn’t matter what the code looks like (to a point, as discussed). I know that’s hard to accept - until you do, you won’t get vibe coding.

So we just let Claude do what it wants?

No, because Claude is dumber than you, I promise. It just knows more than you and has a better memory. You still need to tell Claude what to build, and how it needs to work. The level of guidance is proportional to the complexity of what you’re trying to do. A Todo web app probably needs minimal guidance, outside of UX design and taste levels. A multi-threaded embeddings database needs architecture guidance, code structure guidance and even guidance on which programming principles to focus on using.

Check Agents’ Work

So we’re guiding Claude the way a senior would guide a junior through a complex application design. We’re not checking the code itself, just black box testing. This is a kind of checking the agent’s work. However, as I discussed above, if you’re building something complex you need to guide more of the technical details of the software you’re building.

How do you do that when I’m also advocating that you don’t care about the code - and even further, that you don’t even look at the code?

Aside from black box testing your software regularly by using it, you should also write automated integration tests. Run them frequently. This will tell you if the software is correct, even if the code isn’t. At this point, if black box testing is failing and you have failing automated tests, it doesn’t matter what the code looks like. The software is broken. Sometimes the software is broken because the software design or the code implementation is a mess.
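As a rough sketch of what an automated black box test can look like: run the program as a separate process, feed it input, and judge only what comes out. The command below is a stand-in (a one-line Python script) so the example runs anywhere; in practice you’d point it at your own binary. The function names are made up for illustration.

```python
import subprocess
import sys

# Stand-in for the real binary under test: a one-liner that upper-cases
# a line of stdin. Swap in the path to your own program.
COMMAND = [sys.executable, "-c", "print(input().upper())"]


def run_black_box(command, stdin_text, timeout=10):
    """Run the program and return (exit code, trimmed stdout).
    The test never looks inside the program, only at its behavior."""
    result = subprocess.run(
        command,
        input=stdin_text,
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    return result.returncode, result.stdout.strip()


def check_upper_case():
    """One black box check: known input in, expected output out."""
    code, out = run_black_box(COMMAND, "hello\n")
    return code == 0 and out == "HELLO"


if __name__ == "__main__":
    print("PASS" if check_upper_case() else "FAIL")
```

Because the check only sees the exit code and the output, it doesn’t care whether the code behind it is elegant or a mess. It only cares whether the software works.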

You shouldn’t be reading the code, because you’re not writing the code. That doesn’t mean that you can’t understand the code. Tell Claude to explain what a code file does. Get it to explain how a grouping of code entities interact. Ask it to trace the logical execution flow through the components it’s written. Get the agent to critique its own work. Ask it to explain code to you. Get it to tell you exactly how things work.

There is a certain level of trust required here - you have to trust that Claude is actually reading all the code, and correctly understanding all the interactions. When the context window is relatively empty, the LLM is able to attend closely to most tokens in its window and it will feel smart, and capable of understanding complex interactions across many entities. As its context window fills up, it will get dumber. It will lose attention to important tokens, and it will seem to “overlook” things. It will get lazy, reading less and less code. Reset your session, clear the context, and either continue your analysis or move on to another section if you’re satisfied.

Claude will find design issues. It will find bad abstractions, violations of your documented architecture, potential bugs before the software even runs. Sometimes it feels like vibe coding is performed in layers, in this weird push pull of writing code, analyzing code and fixing code, then repeating. Claude will write code to solve a problem, creating a bunch of smaller problems, which you then later use Claude to fix. Repeat until the problems are small enough that they stop impacting the black box that is your software.

Along the way keep running the code, keep running the tests. This will help keep Claude honest.

The Future of Programming

Programmers are going to be replaced by AI. Not all programmers however, and it’s not too late to ensure you’re part of the cohort that doesn’t get left behind. Those of us who think that our job is to write the best code that we can at any given time need a reality check. Writing code is a commodity now. The fact that you can tell a computer to do things in its magical language becomes worthless when you have a Rosetta Stone of all computer languages that you can use natural language to guide.

The code was never the point - it was the tool we used to build software. Understanding how to build software, understanding how to conceptualize entities in a complex system, being able to break a complex chain of tasks and conditions down into a computationally compatible model, understanding how data is transformed and flows through - this was the point of our education.

Writing software that does useful things, and is maintainable in the long term - that’s the point of everything we do. Up until the mid-2020s, we used programming languages and compilers and interpreters. Now? We can use AI agents that write that code for us. People who can’t program certainly are.