So How's That Vibe Engineering Thing Going?

This new programming paradigm feels weird, am I doing it right?

This seems to be a theme for me with vibe-coded or “vibe-engineered” projects. I discarded 3 “ralph”s before ultimately deciding that I didn’t want to write a CLI agent for any purpose, let alone programming. I think that space is well covered by now and I feel I’ve learnt all I care to learn about agentic AI construction.

Even though I was starting to get good rendering results with my Vulkan real-time renderer, I hit that damn complexity wall I wrote about. I let the agent get ahead of me and do architecture work, and it built itself into a corner that I decided I wasn’t skilled enough to get the both of us out of. So I abandoned it and started from scratch. Then I abandoned that effort before it even rendered a triangle and switched gears entirely for reasons I’ll get into.

I think it all went wrong when I told the agent to perform an architectural refactor. Reflecting on the three dead ralphs and the first dead renderer (the one from the last post), this is a common theme - an architectural refactor eventually kills the project. I’m starting to refer to this as letting the agent “get ahead” of me - it’s doing things that even I don’t fully understand, and it introduces issues that I don’t notice at the time and that always come back to bite me in the ass.

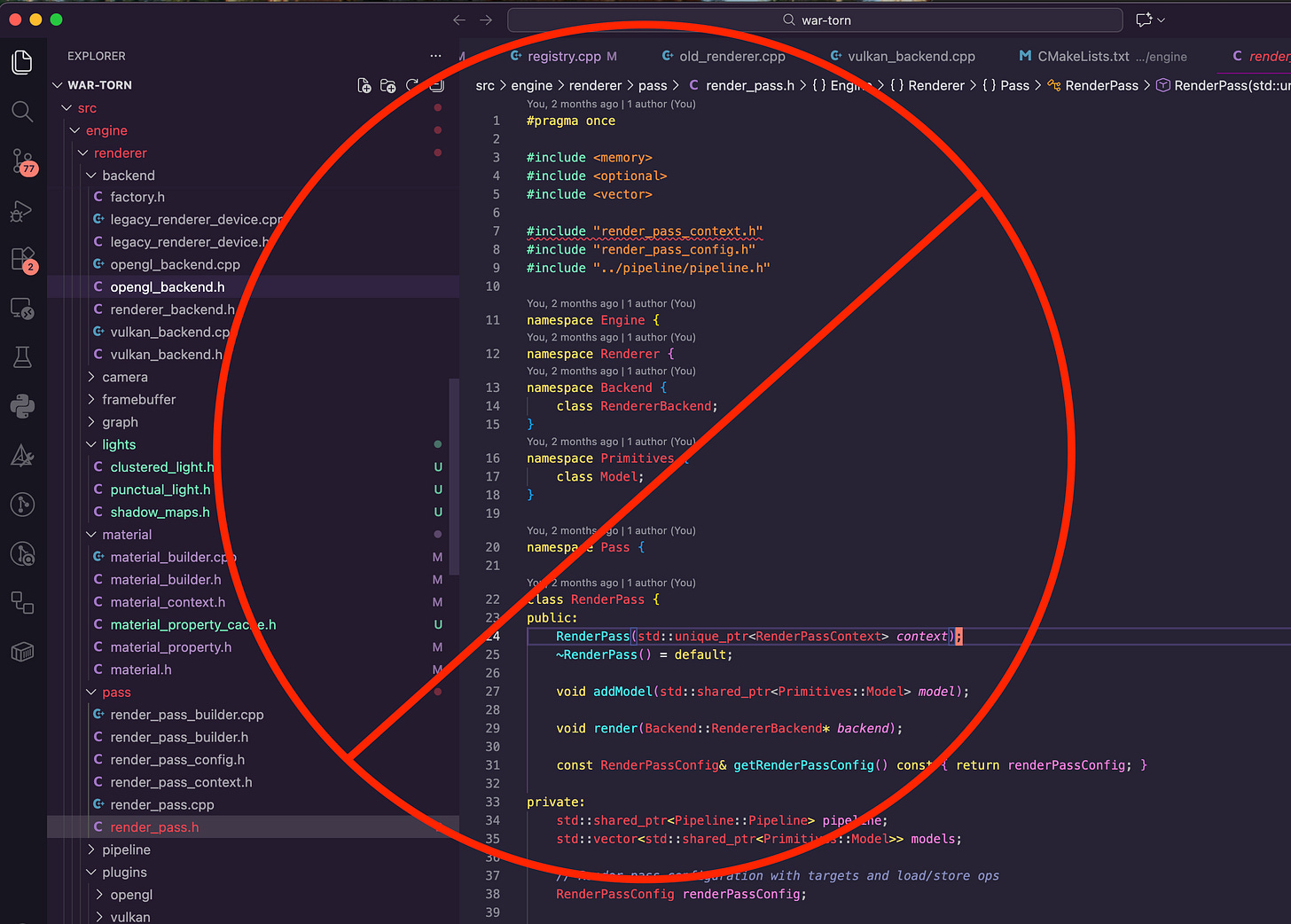

Specifically, I had a functioning Vulkan renderer after following one of the many Vulkan tutorial websites available on the internet - I think it was vulkan-tutorial.com. My Vulkan C++ code was gross, I’ll admit that. In my defense, these tutorials aren’t really written with the goal of building a well-architected, performant renderer - they’re designed to get you results fast. I had started to do the re-architecture myself, but I was struggling with how the abstraction should look.

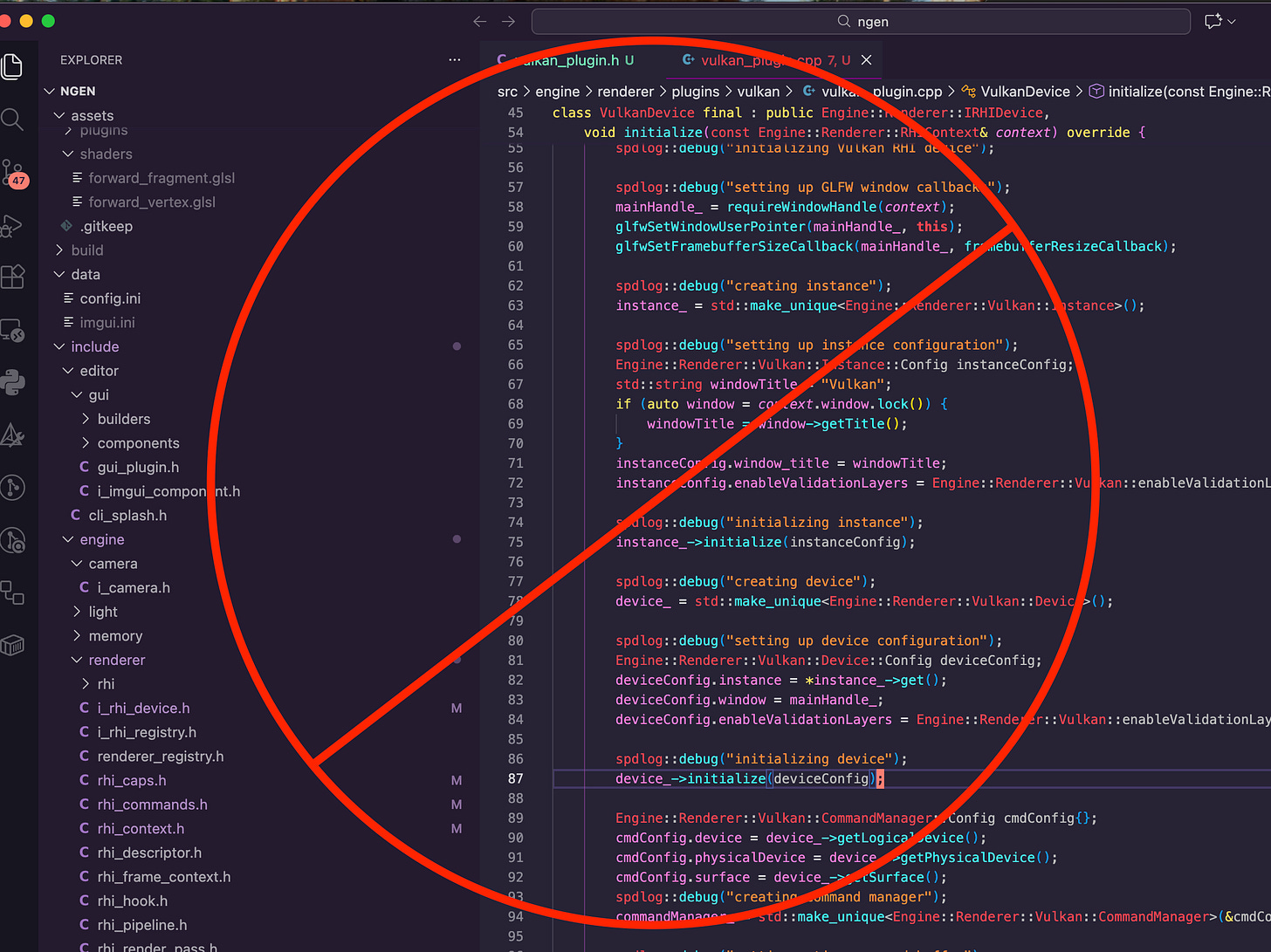

I had a chat with Codex and it informed me of the concept of a “render hardware interface” (RHI), and we chatted about how Unreal/Unity implement their RHIs, and how to implement an RHI in C++. C++ has changed a lot since I last spent any significant time programming in it, and so we discussed C++ concepts like PImpl and type erasure. I vaguely understood the agent’s intentions for our abstraction.
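For readers who haven’t met the pattern, here is a minimal sketch of the general idea behind a PImpl-style RHI handle. It is not the abstraction Codex produced. Every name in it (`Device`, `DeviceImpl`, `NullDeviceImpl`, `makeNullDevice`) is hypothetical, and because the implementation is a swappable virtual backend it sits closer to the bridge pattern than to textbook PImpl. The point is only that calling code sees `Device` and never touches a backend type.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>

// Hypothetical backend interface; a real RHI would expose far more than this.
class DeviceImpl {
public:
    virtual ~DeviceImpl() = default;
    virtual std::string backendName() const = 0;
    virtual std::size_t createBuffer(std::size_t bytes) = 0;
};

// Stand-in backend so the sketch runs without a GPU. A Vulkan backend would
// derive from DeviceImpl and keep its Vulkan handles private to its own .cpp.
class NullDeviceImpl final : public DeviceImpl {
public:
    std::string backendName() const override { return "null"; }

    std::size_t createBuffer(std::size_t bytes) override {
        assert(bytes > 0 && "buffers must have a non-zero size");
        return nextHandle_++;  // hand out increasing fake handles
    }

private:
    std::size_t nextHandle_ = 1;
};

// Public handle: callers depend on this class only, never on a backend.
class Device {
public:
    explicit Device(std::unique_ptr<DeviceImpl> impl) : impl_(std::move(impl)) {}

    std::string backendName() const { return impl_->backendName(); }
    std::size_t createBuffer(std::size_t bytes) { return impl_->createBuffer(bytes); }

private:
    std::unique_ptr<DeviceImpl> impl_;  // the pointer-to-implementation
};

inline Device makeNullDevice() {
    return Device(std::make_unique<NullDeviceImpl>());
}
```

Swapping backends then means writing another `DeviceImpl` subclass and another factory function, with no change to code that only holds a `Device`.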

Send it. YOLO.

“Write a spec for this refactor, breaking the work down logical chunks that we can implement sequentially”.

I worked interactively with Codex on this task. We iterated through the spec, building abstractions in chunks. At every step of the way I’d compile, run and check the renderer. Every now and again there would be a regression - I’d work with Codex to fix the bug and verify manually. At every logical completion point I committed my work into Git. I believed I generally understood enough of what it was doing that I could debug issues, and the few issues that did come up I managed to successfully debug with the agent. This was going so well.

I posted the “Building New Things” article.

In fact, I wouldn’t notice the issues until Codex and I went to perform a second architectural refactor. I wanted to convert the basic deferred renderer that we had built into a more complex design with sub passes and more efficient CPU/GPU data management and transfer. My lamp was rendering everything but transparent materials correctly, and Codex told me that most professional engines have a dedicated transparency sub pass.

In my mind, it was an upgrade of functionality. Change how we allocate memory and store it CPU side, then break the monolithic pipeline down into multiple passes and leverage the sane, well thought out modular code that Codex wrote to power the existing renderer - you know, the descriptor sets, buffers, render graphs and nodes and all the plumbing to send data to and from the CPU and GPU that Codex uses to render the lamp currently.

Codex had a hell of a time with the change. It wasn’t like the last time I asked an LLM to architect code for me where it was clear the agent was only trying to make tests pass and the build compile. The code was organized well and all advertised functionality was there (if buggy) - I was testing it iteratively by firing up the lamp render. Instead, the agent was having a hard time because it had designed itself into a corner.

I knew I wanted to iterate on this renderer. I wanted to introduce new abstractions and indirect logic, and slowly improve the design and architecture of the renderer as I worked. I’m pretty sure I even told Codex this while we were trying to redesign the renderer. Here’s the thing - I had a fuzzy idea of what I wanted the evolved architecture to look like, even though I was relying on the agent to guide me. The agent didn’t share that fuzzy idea, and wasn’t aware enough to know that it didn’t share that fuzzy idea.

When you write code, you’re likely keeping a mental model of the way the thing you’re working on works. You’re also keeping a mental model of how things are structured, related to one another, how things depend on each other, and perhaps more importantly, you’re forming opinions about the things you like and the things you don’t like for whatever reason - maybe it’s too hard to understand, maybe it’s fiddly to work with, maybe it’s very smelly code.

When you communicate with other programmers about the code you’re writing you both have a shared mental model of what you’re discussing. Success in the discussion depends on both of you sharing a similar-ish mental model. In business-speak, this is called “alignment”. The programmer you’re communicating with will likely ask questions or bring up discussion points when they get an idea that your mental models don’t match. One of you has a different mental model than the other, and one is likely more correct than the other. The conversation you have updates one or both mental models. Even if you’re both on the same page immediately, there’s a whole wealth of inferred knowledge and assumptions that are at play - we know that x design decision implies later y design decision through experience or knowledge.

AI agents don’t do this.

Specifically, they don’t have a “mental model” to align to yours, or for you to align to. They don’t hold the shared inferred understanding and assumptions you and your human co-worker might.

Which means that you can’t tell if the AI agent understands your intention, and the AI agent can’t really tell you that your understanding of a problem is just fucking dumb.

When you tell an AI agent to do something, your text input gets sent to a generative transformer model that was architected and trained to complete text and has been explicitly fine-tuned to complete chat style messages. The base generative model can complete source code because it was trained on a large corpus of hosted code, code discussions online, and books and media that contain source code.

When your AI agent is producing code, it’s completing a chat style message from the latent space encoded in its weights, which were created during training on the large corpus of code and discussions. Your chat request activates subspaces in the latent space that are relevant to the encoded vectors of your request, and the model pulls the next completion token from that latent space. The reason the quality of the response (how closely aligned the output code is to your mental model) depends on the quality of your prompt is that your encoded text steers where in latent space the generative transformer samples tokens from.

What’s not in this particular section of latent space is the aforementioned mental model of relationships, dependencies, intentions and opinions that you share, and normally your co-worker shares - unless your co-worker is an LLM.

This means that when you tell your AI agent that you’re iteratively writing a renderer, and that right now you want to implement a simple single-pass forward renderer but later intend to make it multi-subpass, you might be forgiven for assuming that your AI co-worker will build this while thinking about the future changes you’ll need to make. You would be mistaken, however. What your AI co-worker will do is essentially fuzzily remember all the previous code it’s seen that matches your expressed goal and use that to write code to complete your task. This code has not been written with your expressed future intentions in mind unless the trained corpus was as well. Typically it has been written as if it was in its final form without any design consideration for iteration, expansion or modification.

My renderer was written as if the single-pass forward renderer was the final state, and so when it came to introducing sub-passes, I found that Codex had written all the CPU/GPU plumbing code and Vulkan boilerplate to be specific to a single-pass forward renderer. The cascading changes required to implement the next iteration of my renderer were intimidating to say the least and Codex couldn’t do it.
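To make “specific to a single pass” concrete, here is a small sketch of the alternative: plumbing that treats the frame as a list of passes from day one, even while only one pass exists. It is an illustration, not a reconstruction of the renderer described here. `PassDesc`, `FramePlan`, `makeOpaqueOnlyPlan` and `addTransparencyPass` are all made-up names, and the attachments are plain strings rather than Vulkan objects.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// All names here are hypothetical, not taken from the renderer in the post.
struct PassDesc {
    std::string name;
    std::vector<std::string> reads;   // attachments the pass loads or samples
    std::vector<std::string> writes;  // attachments the pass renders into
};

class FramePlan {
public:
    // Passes run in the order they are added. Each attachment a pass reads
    // must already have been written by an earlier pass.
    void addPass(PassDesc pass) {
        for (const std::string& input : pass.reads) {
            assert(isWritten(input) && "pass reads an attachment nobody wrote");
        }
        passes_.push_back(std::move(pass));
    }

    std::size_t passCount() const { return passes_.size(); }
    const PassDesc& pass(std::size_t i) const { return passes_.at(i); }

    bool isWritten(const std::string& attachment) const {
        for (const PassDesc& p : passes_) {
            for (const std::string& w : p.writes) {
                if (w == attachment) return true;
            }
        }
        return false;
    }

private:
    std::vector<PassDesc> passes_;
};

// The first iteration: a single opaque pass, expressed as a list of one.
inline FramePlan makeOpaqueOnlyPlan() {
    FramePlan plan;
    plan.addPass({"opaque", {}, {"color", "depth"}});
    return plan;
}

// The later iteration: transparency becomes one more entry in the list.
inline void addTransparencyPass(FramePlan& plan) {
    plan.addPass({"transparent", {"depth"}, {"color"}});
}
```

Nothing here is clever. The design choice is that descriptor, buffer and pipeline code downstream would loop over `passCount()` instead of assuming exactly one pass, which is the assumption that made the cascading changes so intimidating.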

I had to make a decision at this point. Do I spend the time to build my own mental model of how rigid everything was and then work through the laborious task of refactoring at least 3 layers of a hardware abstracted renderer? Or do I say “fuck it” and throw it away, and start again using this iteration as a base?

Fuck it.

I restarted the renderer starting from the RHI and slowly built it back up. I implemented a simple ImGui interface and ensured I could render multiple windows. I started to write the code to set up rendering a triangle.

Then I stopped again.

I was trying to design how to structure uniform buffers and per frame buffers, and trying to build out a render graph. I realized that I didn’t know what should be sent to the shader right now, and I didn’t know what I’d need to send to the shader in the future, and in what shape/structure that should be at either point in time. In the previous iteration, I had the AI agent create my shader program - I’ve written simple BRDF fragment shaders before and I figured I could just pick up the LLM’s work and run with it.
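For context on what this design question involves, here is a hedged sketch of one common starting point: a single per-frame uniform block laid out to match GLSL `std140`, with one slice of a shared buffer per frame in flight. The fields in `FrameUniforms` are a guess at a bare minimum and are not what this renderer needed. `frameSliceOffset` is a hypothetical helper, and the alignment you pass in would come from `minUniformBufferOffsetAlignment` in the device limits.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical per-frame block, laid out to match GLSL std140:
// each mat4 takes 64 bytes, and a vec3 is aligned to 16 bytes.
struct alignas(16) FrameUniforms {
    float view[16];           // mat4, offset 0
    float projection[16];     // mat4, offset 64
    float cameraPosition[3];  // vec3, offset 128
    float time;               // float packed into the vec3's 4th slot, offset 140
};

static_assert(offsetof(FrameUniforms, cameraPosition) == 128, "vec3 must start at 128");
static_assert(offsetof(FrameUniforms, time) == 140, "float must follow the vec3");
static_assert(sizeof(FrameUniforms) == 144, "block size must match the shader");

// Round value up to a multiple of alignment. The alignment must be a power
// of two, which holds for Vulkan's minUniformBufferOffsetAlignment.
constexpr std::size_t alignUp(std::size_t value, std::size_t alignment) {
    return (value + alignment - 1) & ~(alignment - 1);
}

// Byte offset of a frame's slice inside one buffer shared by all frames in
// flight, so the CPU can write frame N+1 while the GPU still reads frame N.
inline std::size_t frameSliceOffset(std::size_t frameIndex,
                                    std::size_t framesInFlight,
                                    std::size_t minOffsetAlignment) {
    assert(framesInFlight > 0 && "need at least one frame in flight");
    const std::size_t stride = alignUp(sizeof(FrameUniforms), minOffsetAlignment);
    return (frameIndex % framesInFlight) * stride;
}
```

The static assertions are the useful part: when the shader’s block changes shape, the C++ side fails to compile instead of silently uploading misaligned data.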

As I sat in front of my laptop, a growing unease started to fill me. I didn’t know enough to guide the AI agent in completing this work in the exact way I wanted it to in order to iterate on it. I also didn’t trust the AI agent to guide me through my iterative architecture. I might be dumb, but I’m not “try the same thing 4 times and expect something different” dumb.

I had conversations with Codex and ChatGPT on the web interface - I discussed architectures, techniques and approaches. Yet, there was still a gap between what I wanted to do, how I understood it should happen and the ability of the AI to understand my intentions. I didn’t know enough to reasonably judge the AI agent’s designs and I didn’t know enough real-time rendering nuts-and-bolts to trust what it said about how to design this software.

I’ve encountered this gap before, and traditionally it would cause me to dig in and really work at understanding the problem conceptually.

Three months ago I would have advocated letting the AI agent do it for you - “you’re focusing too much on the code, trust the AI agent, it can debug its work and fix the design”. However, the repeated failed architectures, the obsessiveness with making tests pass and builds work, and the tendency to just create, create, create code have changed my mind. Now, I understand more guidance from me is necessary to prevent the AI agent from writing a complex, concrete mess that is hard to change.

That doesn’t mean you shouldn’t let AI architect code, especially if you yourself have no idea what the architecture should look like. You just shouldn’t trust it. Use the ability of the AI agent to rapidly spit out code to explore alternate designs and architectures. Treat it as if it were a feature spike, only at a higher level of abstraction. Use the AI to brainstorm architectures and implement them - with each architecture, get it to produce a working proof-of-concept. Pick the one you like the best.

Then throw it away and start fresh.