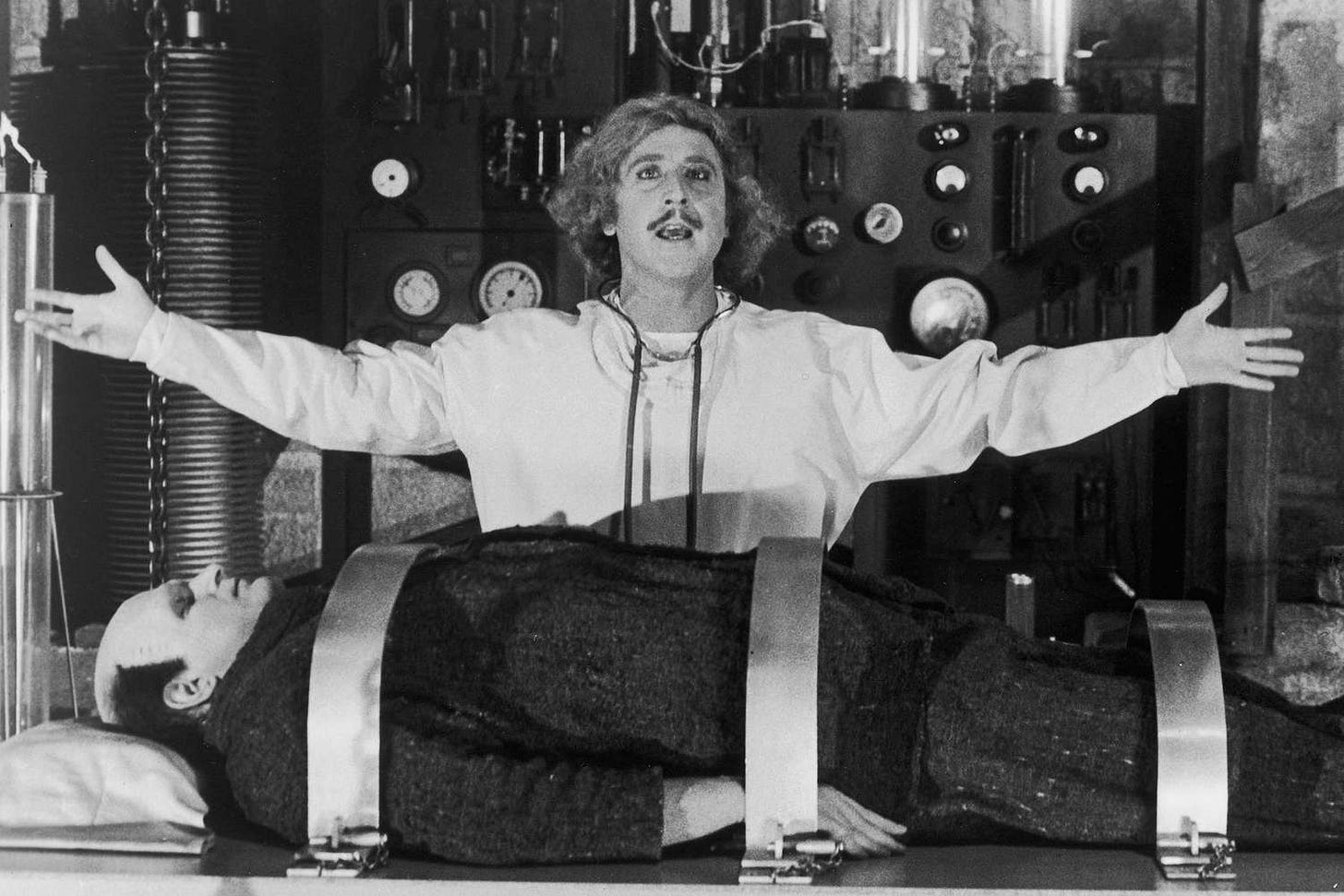

Virtual Insanity

or the Xzibit "Pimp My Ride" meme, can't decide

I don’t just pontificate on software engineering as a career and entrepreneurship. I sometimes also write code. Right now I’m elbow deep inside a fairly …convoluted codebase of my own design. This post is primarily for me to document what the heck is going on so that six-months-from-now-Tim doesn’t want to kill today-Tim.

It all started with Supabase.

There’s only so many times you can write a basic RESTful API server that performs CRUD operations on a database before it stops feeling productive and starts feeling like stupid necessary boilerplate that’s getting in the way of doing real fun stuff. Ditto with authorization and authentication. That’s where Firebase, Pocketbase and Supabase all enter the picture.

These are all “backend-in-a-box” projects. They abstract out auth, data storage and other commonly set-up server tasks and treat it all as a black box. I get the impression that the primary audience for these projects is mobile and PWA developers who don’t want to learn a language other than Kotlin, Swift or JavaScript to write an app that does useful things to the world around it. Even though that’s categorically not me, that doesn’t mean they can’t be useful for other kinds of projects.

I’ve been trying to write some kind of online learning platform app for about 9 months now. Through those 9 months, it’s seen a lot of changes to the overall architecture. I started with a traditional (to me) React SPA communicating with a RESTful Rails API app talking to a Postgres database. I think I was using Keycloak to handle OIDC auth with the Rails application and I was deep in reverse proxy/HTTP header/browser security hell. While I was venting my misery to a friend of mine, he shared how easy his auth was to work with, because Firebase took care of pretty much everything.

Supabase had been on my radar around that time. I recall finding out about it on HN and thinking it was pretty nifty, but not for me. Like Firebase, it handled data storage and auth and also provided an edge/serverless compute platform for more specific, non-data related API needs. Unlike Firebase, it wasn’t run by Google and it was self-hostable. I drove home from my friend’s place seething with jealousy and resolved to rip out the entire core of everything I had built to date and replace it with the more well-known and supported Supabase.

Supabase is great. It took a weekend to replace the database/REST API server and get data into and out of the system. Postgres row-level-security (RLS) policies as a way of securing an endpoint were new to me, and kind of frightening if I’m honest. However, having worked with Hasura in the past, that kind of security model was unfamiliar but not completely foreign. The problems didn’t really start until I started building out the backend serverless compute functions.

The platform I’m building leverages LLM AI technology. I’d be silly to not at least consider it for a new project today but this project is fundamentally infeasible without it. I’m still a little fuzzy on the details of exactly how I’ll be interacting with LLMs and what tasks they’ll be performing for my platform, but I do know that I probably want to leverage some of the software packages that wrangle LLMs such as autogen and outlines. These awesome, Python based software libraries can tame some of the chaos that’s inherent in working with LLMs and they 100% don’t run in Deno, the backend serverless runtime supported by Supabase.

Uh oh.

Firebase can do Python but Firebase is owned by Google and wants to suck you into GCP. I don’t have experience in GCP and at this stage in my career, I don’t want to gain any unless I have to. Also I’m trying to stick with portable stacks that I own entirely, and GCP and Firebase ain’t that. Pocketbase doesn’t even offer a serverless compute platform, which I’m ok with, but it runs primarily on SQLite and I don’t want that. Running a server that runs Python code on demand and integrating it into the security model of Supabase feels like it obviates 75% of the reason I’m even using Supabase. Forking Supabase and swapping in a different runtime felt unnecessarily hard, especially when I considered that without the Deno serverless stuff, Supabase was just Postgres, PostgREST and their own studio app in a trenchcoat.

No worries. Programming is more about thinking and solving problems than typing code anyway. If 75% of the Supabase project does what I need it to and that last 25% is causing me issues, well I can just ignore that 25% and work around it. I figured that integrating a custom edge/serverless platform into Supabase’s Kong reverse proxy would be the hardest part. Starting with just a runtime that could launch code without any authentication or authorization should be a pretty easy milestone to hit, right?

Right?

Nested virtualization isn’t new. Entire cloud hosting companies are built on the back of nested virtualization. You buy a beefy bare metal server, install a hypervisor that manages VMs, and sell small slices of your server to customers, isolating their workloads into VMs. When I fire up a devcontainer on GitHub, I’m connecting to a nested virtualized system. That remote codespace isn’t running in a VM on a bare metal server - it’s running in a VM that’s on another VM. That VM may or may not be served from bare metal.

Actually, GitHub’s Codespaces feature was a major confounding factor in figuring this problem out. I swap between local and remote dev depending on where I am and whether I’m testing against local or a staging server. I’m a big fan of Codespaces and devcontainers in general. I like the isolation from your host system, I like that you can essentially reset your entire dev env on a whim with no ramifications (if you set your project up well), I like that your toolchain and dependency graph are snapshotted in time with a Docker image. As long as you can store and run that Docker image, you will forever be able to build that piece of software. It’s great.

However, what’s not great is that when you’re flying through dozens of documentation tabs, reading about configs and Docker networking and Firecracker and the endless firehose of information that comes with researching something new - your local Mac based devcontainer looks the same as your GitHub hosted codespace.

But it’s not.

Docker on macOS runs differently from Docker on Linux, mostly due to macOS not having a Linux kernel and doing virtualization its own way. Specifically for me, Linux has a /dev/kvm device and macOS does not. The ultimate downstream effect is that micro-VM projects such as Firecracker and Kata Containers don’t work in Linux containers run by Docker on macOS, but they do work in Linux containers run by Docker on Linux. This proved to be very confusing as I bounced from macOS devcontainers to GitHub devcontainers and back. Sometimes the project proof of concept ran and worked (ish), and sometimes it didn’t.
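In hindsight, a tiny preflight check would have saved me most of that confusion. Here is a minimal sketch of one in Python. The function name and the status strings are my own invention for illustration, and it only tests that the device node exists and is readable/writable by the current process, not that KVM actually works end to end.

```python
import os


def kvm_status(device_path: str = "/dev/kvm") -> str:
    """Classify whether a KVM device node looks usable from this process.

    Returns one of three illustrative labels:
      "missing"   - the path does not exist (e.g. a container on a macOS host)
      "no-access" - the path exists but we cannot open it for read/write
      "ok"        - the path exists and is readable and writable
    """
    if not os.path.exists(device_path):
        return "missing"
    if not os.access(device_path, os.R_OK | os.W_OK):
        return "no-access"
    return "ok"


if __name__ == "__main__":
    # Run this inside the devcontainer before trying to boot a micro-VM.
    print(f"/dev/kvm: {kvm_status()}")
```

Running it at the top of a devcontainer startup script makes it obvious which kind of environment you have landed in before any Firecracker tooling gets a chance to fail in a less readable way.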

It took me an embarrassingly long time to figure out that the difference in platforms was the root cause of my issue. Probably a week of branching, trying new things, they work, syncing the branch to my local dev, it doesn’t work, delete branch and try again. Figuring out the root cause didn’t actually get me any closer to a solution. Now I’m looking at a fundamental host OS issue that I definitely don’t have the skills to code my way out of.

Doing a bit of research, I stumbled upon gvisor - “Application Kernel for Containers”? That sounds exactly like what I need. From my understanding, gvisor shims most kernel related calls that it deems unsafe (which I think you can configure), and passes the rest through to the underlying system. You may have caught the issue already, and if you have you caught it before I did. It wasn’t until I managed to integrate gvisor and fire up a micro-VM that I realized I still didn’t have a /dev/kvm in my container, so gvisor passing that through wasn’t going to work.

At this point you might be wondering “why not just run your micro-VM related infrastructure on an EC2 instance or DO droplet?” Why did this all need to be so monolithic and complex when simplicity rules and simplicity says just host it on a Linux VM? If you weren’t, I was. One of the things I liked about the Supabase and Pocketbase projects is that they were fairly self contained. Supabase abstracted their architecture behind a CLI tool (but required intimate knowledge of their docker-compose.yml file to integrate for production) and Pocketbase is designed to be entirely encapsulated the entire time. Ideally my modifications will continue this self-contained course.

The real need for this clown show of nesting micro-VMs in containers is that at some point I know I’m going to want to run LLM generated code. That’s not something I want to do in any old environment. The runtime for that code needs to be locked down hard. A tenet of good security is layering, and by golly if a jailed process in a micro-VM run by a jailed process in a container ain’t layered, I don’t know what is. I probably could have come up with some kind of system that uses Docker images directly to boot containers that run the given code - in fact I did start down that path, but the boot times of Docker containers aren’t great.

Ok, so what are we up to? In the above screenshot, it’s not obvious, but I am running VSCode attached to devcontainer dreamy_shirly, which is running on Docker running in the uberbase_dev VM guest running on my Macbook. So that’s… a container in a VM. Where does the other container and the VM fit in?

Well, this final layer is a devcontainer and the container host it’s running in will be running micro-VMs. So really at this point we have a container that runs micro-VMs inside a VM. That’s VM → container → VM. The last container is unaccounted for, until we remember my obsession with packaging things into self contained Docker images. Eventually this entire mess is going to be packaged into its own Docker image, right now under the working name of uberbase.

This final Docker image completes the insanity, when you consider I’ll eventually deploy on some kind of cloud host:

VM (micro) → Container → Container → VM (cloud host)

Let’s take a step back and evaluate the horrific monstrosity I’ve created.

With this platform, I can install Docker on a host VM running in Digital Ocean or EC2. I can run this image, and I think all I need to do is bind /dev/kvm. (Right now I’m running --privileged locally, which I don’t want to do on prod, and I still need to investigate security and jailing.) The image will then set up its own Docker runtime that it will use to fire off micro-VMs, with the addition of a small Go based API I’ll be writing. This platform will enable me to:
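To make the --privileged versus bound-device distinction concrete, here is a rough Python sketch that builds the two docker run invocations. The helper name is made up, the image name is just the working name from above, and whether passing --device /dev/kvm on its own is actually enough for the micro-VM stack is still an untested assumption on my part.

```python
import shlex
from typing import List


def docker_run_args(image: str, privileged: bool = False) -> List[str]:
    """Build an argv list for `docker run` for the given image.

    privileged=True mirrors the current local dev setup (--privileged).
    privileged=False passes only the KVM device through with --device,
    which is the hoped-for (not yet verified) production setup.
    """
    args = ["docker", "run", "--rm"]
    if privileged:
        args.append("--privileged")
    else:
        args += ["--device", "/dev/kvm"]
    args.append(image)
    return args


if __name__ == "__main__":
    # Print both variants so they can be compared or pasted into a shell.
    print(shlex.join(docker_run_args("uberbase", privileged=True)))
    print(shlex.join(docker_run_args("uberbase")))
```

Keeping the command as a list rather than a single string means it can be handed straight to subprocess.run without any shell quoting surprises.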

Run arbitrary Docker images on demand with fast boot times

Run arbitrary code in a well defined containerized environment (this is my goal)

Run short or long lived tasks on demand

Furthermore, if I integrate the aforementioned Go API I’m writing, Docker Compose, Postgres, PostgREST, Caddy, an auth provider (I’m leaning toward Authelia right now) and a customized copy of Supabase’s studio, then I’ll also be able to get:

Persistent storage

Authorization and authentication

a RESTful API for data operations

deep integration of the above with the instant runtime monster I built

a snazzy admin web interface for managing the above

and it all should be bundled neatly into a single Docker image that you integrate with via environment variables.

Right now I’m still building the Firecracker bins in my devcontainer on my VM. This thing works but it’s slow. Real, real slow. I’m confident it won’t be this slow in production, because I actually won’t be deploying it this way, inside an open source VM runtime (UTM) on a consumer laptop. I’ll probably end up building the final Docker image so that you can run subsets of services, which will enable me to run the Docker/Firecracker/ignite (custom fork, project is archived) stack on a separate VM/machine from the rest of the stack, yet they will be able to communicate.
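For the "run subsets of services" idea, the simplest thing I can imagine is a single environment variable that lists which services a given container should start. Here is a small Python sketch of that. To be clear, the variable name UBERBASE_SERVICES and the service names are hypothetical placeholders I made up for this example, and nothing like this exists in the project yet.

```python
import os
from typing import Mapping, Set

# Hypothetical service names, loosely based on the components listed above.
KNOWN_SERVICES = {"runtime", "api", "postgres", "postgrest", "caddy", "auth", "studio"}


def enabled_services(env: Mapping[str, str] = os.environ) -> Set[str]:
    """Return the set of services this container should start.

    Reads a comma-separated list from the (hypothetical) UBERBASE_SERVICES
    variable. An unset or empty variable means "run everything".
    """
    raw = env.get("UBERBASE_SERVICES", "").strip()
    if not raw:
        return set(KNOWN_SERVICES)
    requested = {name.strip() for name in raw.split(",") if name.strip()}
    unknown = requested - KNOWN_SERVICES
    if unknown:
        # Fail loudly on typos instead of silently starting nothing.
        raise ValueError(f"unknown services: {sorted(unknown)}")
    return requested


if __name__ == "__main__":
    print(sorted(enabled_services()))
```

With something like this, the micro-VM host could run with only the runtime and API enabled while a second machine runs the database, auth and proxy pieces from the same image.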

If I’ve designed this correctly, I’ll also get some pretty flexible and impressive scaling abilities with this thing and some DevOps know-how:

scalable persistent storage via Postgres and sharding

centralized authorization and authentication

edge compute capabilities (via running partial services on the container in edge nodes)

geo-based load balancing (via custom Caddy configs and your cloud operator’s load balancing options)

This should answer the question “what happens if my little project blows up” out of the box. I’m still working on this and it might not end up working out the way I want, but that’s ok. I mostly just wanted to figure out why this seemed so much harder than it felt like it should.

Right now the code is private, but I do intend on open sourcing it under some kind of license that allows you to use it however you want except to run a service based solely on it. I haven’t ruled out a pivot from my ed-tech idea (and maybe a pivot back after) if this ends up useful and popular and people want to pay me to deploy it for them (basically do what Supabase does).

A Week Later

I typically like to sit on blog posts for at least a week if not more. I find after an article is written, the ideas settle and re-organize themselves. I re-read what I wrote making sure it still kind of makes sense and catch the grammatical and spelling errors that I can. I’ll sometimes re-word stuff and move things around to make my point clearer.

All that to say, I’ve been running this nested virtualization stack for a week now and it’s way too slow to use. At first I thought it was because I was using VSCode Tunnels, but switching over to an SSH-connected session with my VM is still painfully slow. I’ve reverted to working on a hosted GitHub Codespace for platform development. If I needed to work locally, I’d source a cheap Linux server or even a cheap Linux laptop and run the virtualization there natively.