Building in Public

A demo-day, of sorts. Maybe the start of something ongoing.

My whole one subscriber and I have probably noticed that I haven’t written in a while. That’s because I’ve been busy building things. Not only do I stand on digital soap boxes and espouse entrepreneurship to the masses, I also pursue it. I have an LLC, and it made money in 2023. Never mind that it was a contract I did between jobs, and that without it I would have run a loss. I’m a business success!

But eking out $1200 a year doing service work when I get bored isn’t my idea of entrepreneurship. I want something bigger, and so I’m building toward something bigger. I’ve been working on some kind of AI-integrated learning platform (kind of like Andrej Karpathy but also totally different). I’ve been busy slinging Go and Python around like it’s nobody’s business.

Part of a good AI-powered education application is the AI agent, especially if you’re planning on letting your end user talk directly to it. OpenAI, Anthropic, et al. all offer LLMs you can programmatically talk to, and to be honest they’re amazing. That said, there are a lot of things they just don’t do yet. They don’t have memory (beyond what you keep track of and send them manually) and they don’t really have much agency over the world. Some of them (maybe most or all, I haven’t gone through and checked) have the ability to search the internet and read web pages. Otherwise they can’t do much apart from outputting text, images and video.

This is not an agent. An agent has context, autonomy and, well, agency. It’s kind of in the name. The cool thing about being a programmer is that I can make all that on top of the impressive LLM tech available to me for a modest fee. That’s what I’ve been doing.

Above is a short debugging session of my AI agent using my AI agent. I’ve always been fascinated by bootstrapping and so this was a no-brainer in my mind.

Even though the debugging session is ultimately unsuccessful (we make progress but the agent slows down way too much to continue to work), I’m really happy with the captured demo. It manages to seamlessly demo some tech that you might not even notice unless you’re familiar with LLMs and AI, and the challenges of integrating them into traditional software.

In case the demo is TLDW for you, or you’re just not that interested in watching me bang on a keyboard for 45 minutes talking to a stochastic parrot, here are the highlights:

Historical awareness of previous conversations - the agent immediately recognizes the previous work we were doing and offers to resume

Explicit, then implicit tool usage - despite initial protestations to the contrary, the agent can and does use tools to help the user. Later in the task, the agent calls several different tools in a row to perform a complex task

Access to a “knowledge store” that holds long-form information too large for a single prompt, and successful retrieval of information from that store (we came so close to fixing the bug, I think, before the agent got too slow and started to bug out)

Contextual understanding of user input - I refer to ‘that file’ and ‘the code under test’ and the agent is able to infer which files I’m talking about based on the current context

As mentioned above, I ultimately consider the session a failure. We didn’t fix the bug, we didn’t have a green run of our test. I’ve used this agent to successfully write and debug code within the last week. So what went wrong?

Before I dive into what went wrong, let’s go over how some of the above works:

The user (me) is interacting with gpt-4o-mini, chosen because its context window is fairly large, it’s fairly technologically competitive, and it won’t bankrupt me if the agent decides to enter an infinite loop at the speed of silicon.

All interactions between the user and the agent are stored in a local ChromaDB vector database, with embeddings generated using OpenAI’s text-embedding-3-large model, chosen due to its larger context size. Right now everything is aggressively chunked to aid in retrieval, but we’ll get back to that.

On start-up, the agent pulls the last 24 hrs of interaction history from ChromaDB and inserts it into its prompt formatted as a simple timestamped chat log. The history is sorted by recency.

Also on start-up, after the interaction history is pulled, the agent makes an out-of-band LLM request to come up with questions that will allow it to pull interaction history out of ChromaDB that is outside the 24-hour window. The agent then queries ChromaDB with these questions and inserts these “memories” into its prompt (so far with no special formatting). These “memories” are ordered by a custom ranking algorithm based on cosine similarity but with a bias for recency.

Lastly, the user can store information in ChromaDB through the agent on demand using tooling. This is also aggressively chunked. I mention this only because it’s relevant to the problem.
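To make the “cosine similarity with a bias for recency” idea a little more concrete, here is a minimal sketch of one way such a ranking could work. It is not the agent’s actual algorithm: the function names, the half-life decay, and the `recency_weight` blend are all assumptions made up for illustration, and the memories are plain dicts rather than real ChromaDB results.

```python
import math
import time

def cosine_similarity(a, b):
    """Plain cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_memories(query_vec, memories, now=None,
                  half_life_hours=72.0, recency_weight=0.3):
    """Order memories by similarity to the query, nudged toward newer ones.

    Hypothetical shape: each memory is a dict with 'text', 'embedding'
    and 'timestamp' (epoch seconds). The weights are illustrative guesses.
    """
    now = time.time() if now is None else now
    scored = []
    for memory in memories:
        sim = cosine_similarity(query_vec, memory["embedding"])
        age_hours = max(0.0, (now - memory["timestamp"]) / 3600.0)
        # 1.0 for a brand-new memory, halving every `half_life_hours`.
        recency = 0.5 ** (age_hours / half_life_hours)
        score = (1.0 - recency_weight) * sim + recency_weight * recency
        scored.append((score, memory))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [memory for _, memory in scored]
```

With a blend like this, a fresh memory beats an equally relevant old one, but an old and highly relevant memory still outranks something recent and unrelated.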

I won’t go into how the tooling works or how the back and forth between the user and agent works to form a coherent conversation. Many pixels have been used to document that information and it’s a simple search engine query away.

As for what went wrong - well, I recorded that video this morning. I don’t know exactly what went wrong, but I have a series of hypotheses I need to test:

I changed how I deal with OpenAI’s context window token limits and, more sneaky and annoying than I expected, their tokens-per-minute request limit. Before, if a message was larger than the context window, I cut the history in half, dropped the older half, recalculated the prompt and sent it to OpenAI. This resulted in the agent being really smart and pleasant to work with for a long while, then occasionally it would get real dumb for a conversation iteration or two, then get smart again (I will henceforth refer to this as a “brain fart”). I attributed this to how I was managing the conversation history, so I refactored it to drop history more incrementally.
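The difference between the two trimming strategies is easy to see in a small sketch. This is an illustration under assumptions, not the agent’s real code: `count_tokens` is a crude word-count stand-in for a real tokenizer, and the history is just a list of strings ordered oldest first.

```python
def count_tokens(text):
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())

def trim_by_halving(history, budget, count=count_tokens):
    """Old approach: while over budget, throw away the older half of the history."""
    trimmed = list(history)
    while trimmed and sum(count(m) for m in trimmed) > budget:
        drop = max(1, len(trimmed) // 2)  # always drop at least one message
        trimmed = trimmed[drop:]
    return trimmed

def trim_incrementally(history, budget, count=count_tokens):
    """Newer approach: drop the single oldest message until the history fits."""
    trimmed = list(history)
    total = sum(count(m) for m in trimmed)
    while trimmed and total > budget:
        total -= count(trimmed.pop(0))
    return trimmed

history = ["a b c", "d e", "f g h i", "j"]  # 3 + 2 + 4 + 1 = 10 "tokens"
print(trim_by_halving(history, budget=8))     # keeps the last two messages
print(trim_incrementally(history, budget=8))  # keeps the last three messages
```

Halving throws away context that would still have fit, which is one plausible reason the agent would suddenly lose the thread right after a trim.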

After that, the agent seemed really smart and stopped having brain farts. I really felt more productive working with the agent than without it. Then I hit the OpenAI tokens-per-minute (TPM) errors. I didn’t know they throttled you for consuming too many tokens too quickly, and no part of my agent was designed with this in mind. I decided to use my tokens more efficiently, and refactored how ChromaDB documents were chunked. While I was in there, I decided to separate documents and chat history into different collections.
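Using fewer tokens is one way to stay under a TPM limit. Another is to pace requests on the client side. Below is a minimal sketch of a sliding-window limiter, assuming you can estimate a request’s token count before sending it. The class name, the 60-second window, and the injectable `clock` and `sleep` hooks are all illustrative choices, not part of the agent or of any OpenAI library.

```python
import time
from collections import deque

class TokenRateLimiter:
    """Client-side sliding-window budget: at most `tokens_per_minute` in any 60 s."""

    def __init__(self, tokens_per_minute, clock=time.monotonic, sleep=time.sleep):
        self.limit = tokens_per_minute
        self.clock = clock          # injectable so the limiter can be tested
        self.sleep = sleep
        self.window = deque()       # (timestamp, tokens) for recent requests
        self.used = 0               # tokens currently inside the window

    def _expire(self, now):
        # Forget requests that are at least 60 seconds old.
        while self.window and now - self.window[0][0] >= 60.0:
            _, tokens = self.window.popleft()
            self.used -= tokens

    def acquire(self, tokens):
        """Block until `tokens` fit in the current window, then record them."""
        if tokens > self.limit:
            raise ValueError("request exceeds the per-minute budget on its own")
        while True:
            now = self.clock()
            self._expire(now)
            if self.used + tokens <= self.limit:
                self.window.append((now, tokens))
                self.used += tokens
                return
            # Wait until the oldest recorded request ages out of the window.
            self.sleep(60.0 - (now - self.window[0][0]))
```

Calling `limiter.acquire(estimated_tokens)` before each API request trades latency for fewer throttling errors. It is only an estimate of what the server counts, so retrying on a rate-limit error is still worth keeping.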

Since that change, we no longer hit token limits, and the “brain farts” I see seem to have more to do with bugs in how the tooling system feeds results back to the agent. But it’s not as smart. I have to remind it about its capabilities more frequently, and I have to ask it to pull information to get better context on our work.

Ultimately I think splitting the documents out from the chat history was a mistake. I am also toying with the idea of not chunking chat history, since the really long messages in the history contain so much contextual information that it’s worth using more tokens and getting closer to the TPM limit more frequently.

I plan on fixing that error, and improving the agent by working with the agent on my codebases (right now, the agent). Did I mention “agent” enough to pop up in Google for it yet? Ultimately the agent is going to be plugged into my learning platform and used to help both teachers and students by leveraging its ability to generate content and interact with computing systems. I might even end up releasing the agent as a product once I feel it’s powerful enough.

What does this cost to run, you might be wondering. I was certainly wondering it this morning as I was watching it spin through writing a test, running a test, writing a test, running it, etc. That’s why I stopped it in the video - I was getting nervous about the cost.

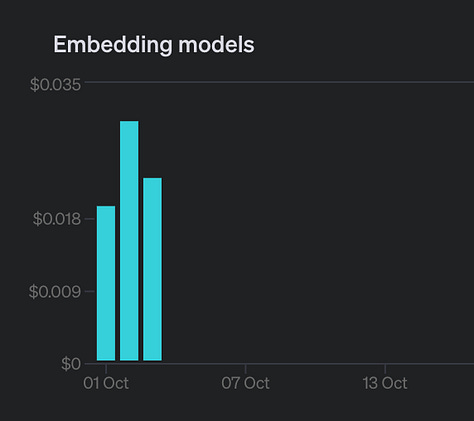

Tada:

You can see the spend dropped when I implemented my chunking and history changes. Even before that, using it heavily for around 6 hours I racked up a $2 bill. At 8 hours a day, that’s roughly $3 a day or ~$90 a month if I used it all day, every day. I believe that if a tool you use is good enough to use every day, all day, then it’s worth at least $90 a month.
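For anyone who wants to plug in their own numbers, the back-of-the-envelope math looks like this. The function name and the 30-day month are assumptions for illustration; the $2 over 6 hours comes from the figures above.

```python
def projected_cost(spend, hours_observed, hours_per_day=8, days_per_month=30):
    """Scale an observed spend up to a daily and monthly estimate."""
    hourly = spend / hours_observed
    daily = hourly * hours_per_day
    return daily, daily * days_per_month

daily, monthly = projected_cost(2.00, 6)
print(f"${daily:.2f}/day, ${monthly:.2f}/month")  # $2.67/day, $80.00/month
```

Unrounded, that is about $80 a month. The ~$90 figure comes from rounding the daily cost up to $3 before multiplying.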

And it’s fun to use.